vistan

A simple Python library to run variational inference (VI) on Stan models

vistan uses autograd and PyStan under the hood. The aim is to provide a “petting zoo” to make it easy to play around with the different variational methods discussed in the NeurIPS 2020 paper Advances in BBVI. Please check out the repository for full details. Read more below for a brief overview.

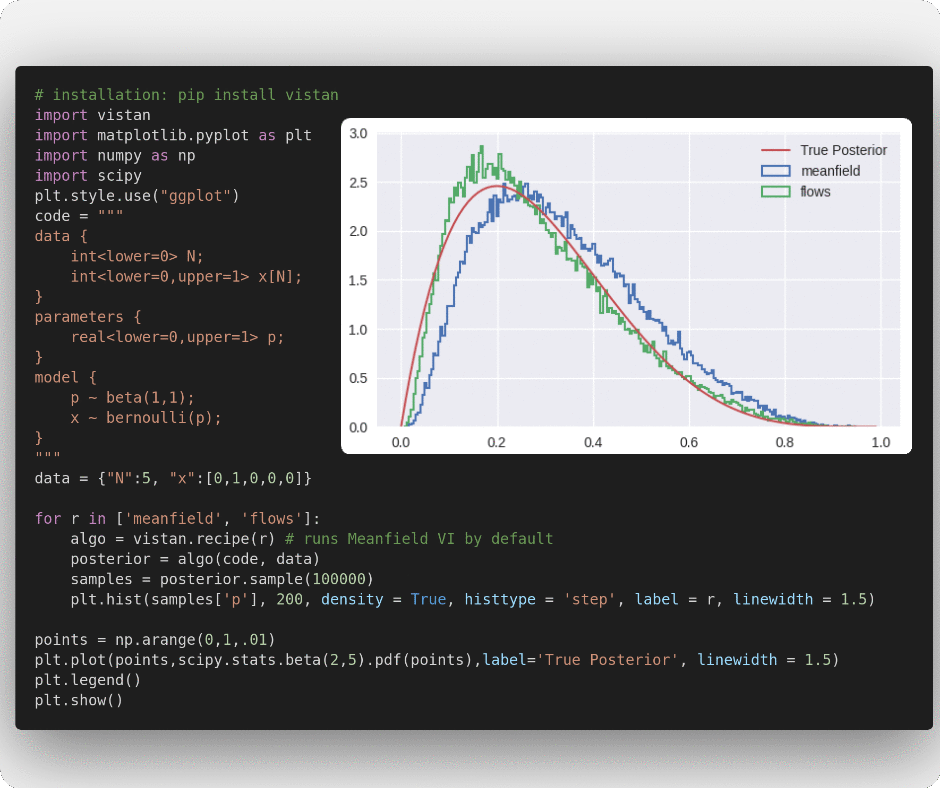

A simple Beta-Bernoulli example with vistan. The posterior approximated by the flows is closer to the true posterior than the one from meanfield. See the paper for more details about the algorithms used.
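For reference, the true posterior in a Beta-Bernoulli model is available in closed form, which is what makes it a convenient sanity check for VI. The sketch below computes it with the standard library only; the observations `x` and the Beta(1, 1) prior are assumptions chosen for illustration, not values taken from the figure.

```python
import math


def beta_bernoulli_posterior(x, alpha=1.0, beta=1.0):
    """Exact posterior parameters for a Beta(alpha, beta) prior and 0/1 data x."""
    successes = sum(x)
    failures = len(x) - successes
    return alpha + successes, beta + failures


def beta_pdf(p, a, b):
    """Density of Beta(a, b) at p in (0, 1), computed via log-gamma for stability."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(p) + (b - 1) * math.log(1 - p))


x = [0, 1, 0, 0, 0]  # hypothetical observations
a_post, b_post = beta_bernoulli_posterior(x)
posterior_mean = a_post / (a_post + b_post)
```

Samples from any approximate posterior can then be compared against `beta_pdf(p, a_post, b_post)` on a grid of `p` values.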

Features

- Initialization: Laplace’s method to initialize full-rank Gaussian

- Gradient Estimators: Total-gradient, STL, DReG, closed-form entropy

- Variational Families: Full-rank Gaussian, Diagonal Gaussian, RealNVP

- Objectives: ELBO, IW-ELBO

- IW-sampling: Posterior samples using importance weighting
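To give a feel for what importance-weighted sampling does, here is a minimal standard-library sketch: draw `m` candidates from a proposal `q`, weight each by `p / q`, and keep one candidate with probability proportional to its weight. The toy target (an unnormalized Beta(2, 5)) and the uniform proposal are assumptions for illustration; vistan's internal implementation may differ.

```python
import math
import random

EPS = 1e-12  # keep candidates strictly inside (0, 1) so the logs are finite


def log_target(z):
    """Unnormalized log-density of Beta(2, 5) on (0, 1)."""
    return math.log(z) + 4.0 * math.log(1.0 - z)


def iw_sample(rng, m):
    """Draw one sample by resampling among m candidates from Uniform(0, 1)."""
    candidates = [rng.uniform(EPS, 1.0 - EPS) for _ in range(m)]
    # log q(z) = 0 for the uniform proposal, so the log-weight is just log p(z).
    log_w = [log_target(z) for z in candidates]
    max_lw = max(log_w)
    weights = [math.exp(lw - max_lw) for lw in log_w]  # shifted for stability
    return rng.choices(candidates, weights=weights, k=1)[0]


rng = random.Random(0)
plain = [iw_sample(rng, 1) for _ in range(2000)]      # m = 1: samples follow q
weighted = [iw_sample(rng, 50) for _ in range(2000)]  # m = 50: closer to the target
mean_plain = sum(plain) / len(plain)
mean_weighted = sum(weighted) / len(weighted)
```

With `m = 1` the samples simply follow the proposal, while larger `m` pulls their distribution toward the target (whose mean is 2/7 here).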

Installation

pip install vistan

Usage

The typical usage of the package would have the following steps:

- Create an algorithm. This can be done in two ways:
    - The easiest is to use a pre-baked recipe as `algo=vistan.recipe('meanfield')`. There are various options:
        - `'advi'`: Run our implementation of PyStan's ADVI
        - `'meanfield'`: Fully factorized Gaussian, a.k.a. meanfield VI
        - `'fullrank'`: Use a full-rank Gaussian to better capture dependence between latent variables
        - `'flows'`: Use RealNVP flow-based VI
        - `'method x'`: Use methods from the paper Advances in BBVI, where x is one of `[0, 1, 2, 3a, 3b, 4a, 4b, 4c, 4d]`
    - Alternatively, you can create a custom algorithm as `algo=vistan.algorithm()`. The most frequently used arguments are:
        - `vi_family`: This can be one of `['gaussian', 'diagonal', 'rnvp']`. (Default: `gaussian`)
        - `max_iter`: The maximum number of optimization iterations. (Default: 100)
        - `optimizer`: This can be `'adam'` or `'advi'`. (Default: `'adam'`)
        - `grad_estimator`: Which gradient estimator to use. Can be `'Total-gradient'`, `'STL'`, `'DReG'`, or `'closed-form-entropy'`. (Default: `'DReG'`)
        - `M_iw_train`: The number of importance samples. Use `1` for standard variational inference, or more for importance-weighted variational inference. (Default: 1)
        - `per_iter_sample_budget`: The total number of evaluations to use in each iteration. (Default: 100)

- Get an approximate posterior as `posterior=algo(code, data)`. This runs the algorithm on the Stan model given by the string `code` with observations given by `data`.

- Draw samples from the approximate posterior as `samples=posterior.sample(100)`. You can also draw samples using importance weighting as `posterior.sample(100, M_iw_sample=10)`. Further, you can evaluate the log-probability of the posterior as `posterior.log_prob(latents)`.
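The steps above can be put together roughly as follows. This is a sketch rather than a definitive example: the Stan model and data are a hypothetical Beta-Bernoulli problem, the helper names `run_recipe` and `run_custom` are made up for illustration, and only the `vistan` calls and argument names listed above are used. The array syntax in the Stan program assumes the older Stan version bundled with PyStan 2; newer Stan releases use a different declaration style.

```python
# Hypothetical Stan program: Bernoulli observations with a Beta(1, 1) prior on p.
code = """
data {
    int<lower=0> N;
    int<lower=0,upper=1> x[N];
}
parameters {
    real<lower=0,upper=1> p;
}
model {
    p ~ beta(1, 1);
    x ~ bernoulli(p);
}
"""
data = {"N": 5, "x": [0, 1, 0, 0, 0]}  # hypothetical observations


def run_recipe(code, data, name="meanfield", n_samples=100):
    """Fit with a pre-baked recipe, then draw plain and importance-weighted samples."""
    import vistan  # imported lazily; requires vistan and PyStan to be installed

    algo = vistan.recipe(name)
    posterior = algo(code, data)
    samples = posterior.sample(n_samples)
    iw_samples = posterior.sample(n_samples, M_iw_sample=10)
    return posterior, samples, iw_samples


def run_custom(code, data, n_samples=100):
    """Fit with a custom algorithm built from the arguments listed above."""
    import vistan

    algo = vistan.algorithm(
        vi_family="rnvp",
        max_iter=100,
        optimizer="adam",
        grad_estimator="DReG",
        M_iw_train=1,
        per_iter_sample_budget=100,
    )
    posterior = algo(code, data)
    return posterior, posterior.sample(n_samples)
```

Calling `run_recipe(code, data)` compiles the Stan model and runs the optimization, so it can take a while on first use. The structure of the returned samples is not described here, so inspect it before indexing into it.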

Citing vistan

If you use vistan, please consider citing:

@inproceedings{aagrawal2020,
  author    = {Abhinav Agrawal and
               Daniel R. Sheldon and
               Justin Domke},
  title     = {Advances in Black-Box {VI:} Normalizing Flows, Importance Weighting,
               and Optimization},
  booktitle = {Advances in Neural Information Processing Systems 33: Annual Conference
               on Neural Information Processing Systems 2020, NeurIPS 2020, December
               6-12, 2020, virtual},
  year      = {2020},
}